The

Lempel Ziv Welch algorithm (LZW) is a classic compression algorithm published in 1984. It's a simple but practical algorithm that should be under every geek's belt and is often used in combination with other techniques.

The basic idea

Let's start with a plain English description of how this algorithm works. Let's say that we want to compress the following input string:

xxxxyyyyxxxxxxxxxxxx

Compression

If we're lazy, all we have to do to produce a valid output is to represent each letter using 2 characters.

0x0x0x0x0y0y0y0y0x0x0x0x0x0x0x0x0x0x0x0x

According to the compressed language of LZW, when a character pair starts with a "0", that means that the second character is the original letter. This has doubled the size of the sequence, but hopefully this is not the actual output. When the first character is something other than "0", the character pair becomes a reference to some prior long sequence. These references are what will compress the sequence.

In order to compress, we need to use a special table called a "dictionary" which maps 2 character values to the string they represent. Right off the bat, the dictionary will start with the following values:

| Actual string | 2 character value |

|---|

| a | 0a |

| b | 0b |

| ... | ... |

| x | 0x |

| y | 0y |

| z | 0z |

We start scanning through the input string from the first letter and find the longest sequence of letters which are in the dictionary. At this point, that would obviously be the first letter, since the dictionary only have single letters.

xxxxyyyyxxxxxxxxxxxx

After finding the longest string from the start which is in the dictionary, "x", including also the next letter in the input will make the shortest string which is not in the dictionary. This unregistered string is "xx".

We replace the longest string found in the dictionary with the corresponding 2 character value:

0xxxxyyyyxxxxxxxxxxxx

The next shortest string not in the dictionary is then added to the dictionary.

| Actual string | 2 character value |

|---|

| a | 0a |

| b | 0b |

| ... | ... |

| z | 0z |

| xx | 1a |

Now we continue scanning the input string from after the last replacement.

Again, we look for the longest string that is in the dictionary. That would be "xx", which we added in the previous step.

0xxxxyyyyxxxxxxxxxxxx

We replace this string with the corresponding 2 character value and add into the dictionary this string plus the next letter ("xxx").

0x1axyyyyxxxxxxxxxxxx

| Actual string | 2 character value |

|---|

| a | 0a |

| ... | ... |

| z | 0z |

| xx | 1a |

| xxx | 1b |

Repeat the process from right after the last replacement.

0x1axyyyyxxxxxxxxxxxx

This time it was "x" on its own that was the longest string in the dictionary since "xy" was not in the dictionary.

0x1a0xyyyyxxxxxxxxxxxx

| Actual string | 2 character value |

|---|

| ... | ... |

| z | 0z |

| xx | 1a |

| xxx | 1b |

| xy | 1c |

Repeat.

0x1a0xyyyyxxxxxxxxxxxx

Longest string in dictionary was "y", shortest string not in dictionary was "yy".

0x1a0x0yyyyxxxxxxxxxxxx

| Actual string | 2 character value |

|---|

| ... | ... |

| z | 0z |

| xx | 1a |

| xxx | 1b |

| xy | 1c |

| yy | 1d |

Repeat.

0x1a0x0yyyyxxxxxxxxxxxx

Longest string in dictionary was "yy", shortest string not in dictionary was "yyy".

0x1a0x0y1dyxxxxxxxxxxxx

| Actual string | 2 character value |

|---|

| ... | ... |

| z | 0z |

| xx | 1a |

| xxx | 1b |

| xy | 1c |

| yy | 1d |

| yyy | 1e |

Repeat.

0x1a0x0y1dyxxxxxxxxxxxx

Longest string in dictionary was "y", shortest string not in dictionary was "yx".

0x1a0x0y1d0yxxxxxxxxxxxx

| Actual string | 2 character value |

|---|

| ... | ... |

| xx | 1a |

| xxx | 1b |

| xy | 1c |

| yy | 1d |

| yyy | 1e |

| yx | 1e |

Repeat.

0x1a0x0y1d0yxxxxxxxxxxxx

Longest string in dictionary was "xxx", shortest string not in dictionary was "xxxx".

0x1a0x0y1d0y1bxxxxxxxxx

| Actual string | 2 character value |

|---|

| ... | ... |

| xx | 1a |

| xxx | 1b |

| xy | 1c |

| yy | 1d |

| yyy | 1e |

| yx | 1e |

| xxxx | 1f |

Repeat.

0x1a0x0y1d0y1bxxxxxxxxx

Longest string in dictionary was "xxxx", shortest string not in dictionary was "xxxxx".

0x1a0x0y1d0y1b1fxxxxx

| Actual string | 2 character value |

|---|

| ... | ... |

| xx | 1a |

| xxx | 1b |

| xy | 1c |

| yy | 1d |

| yyy | 1e |

| yx | 1e |

| xxxx | 1f |

| xxxxx | 1g |

Repeat.

0x1a0x0y1d0y1b1fxxxxx

Longest string in dictionary was "xxxxx" which resulted in the whole input string being consumed, hence there is nothing left to add to the dictionary.

0x1a0x0y1d0y1b1f1g

0x1a0x0y1d0y1b1f1g

This has resulted in an output string which is 2 characters shorter. Obviously the string was engineered to be compressed. Had it been a longer string then the dictionary would have contained longer strings which would lead to more compression.

Decompression

Compression is quite straightforward and so should decompression. Except that it's a little less straightforward because of a special case that can sneak up on you if you don't know about it (some online sources don't mention it).

If we knew what the dictionary contains then we can simply replace each 2 character value with its corresponding string. But in order to know from the start what the dictionary is we would have to include it with the output string, which would be a considerable amount of extra bytes. Instead, decompression basically consists of guessing what the dictionary contained one 2 character value at a time.

We start with the obvious. The dictionary surely contained all the single letters.

| Actual string | 2 character value |

|---|

| 0a | a |

| 0b | b |

| ... | ... |

| 0x | x |

| 0y | y |

| 0z | z |

The 2 character value is always one of the above initial dictionary (since that is the first shortest string not in the dictionary), so we go ahead and take care of that.

0x1a0x0y1d0y1b1f1g

x1a0x0y1d0y1b1f1g

From here on, if a 2 character value is in the dictionary then we just replace it with its corresponding string. After each replacement we use the replaced string to update the dictionary (as will be shown in an examples further down). The problem is if the 2 character value is not in the dictionary, as is the case now. This is the special case.

The next 2 character value is not in the dictionary. But notice that it is the very next 2 character value that will enter the dictionary, "1a". When this is the case, the following scenario must have taken place:

The input string has been determined to start with an "x", but the following letters are unknown.

x _ _ _

We know that the following letters were replaced with the next available 2 character value (from the compressed string), which means that it must be the shortest string that was not in the dictionary after "x" was replaced with "0x".

This shortest string must have been the last string that was replaced with a 2 character value ("x"), plus the letter after it. So the dictionary must have looked something like this:

| Actual string | 2 character value |

|---|

| ... | ... |

| 0x | x |

| 0y | y |

| 0z | z |

| 1a | x_ |

(Notice that the blank is an unknown letter)

So then the first letter of this unknown string being referred to by the 2 character value "1a" is "x". In that case then we know what the second letter is in the input string: an "x", according to the dictionary we're constructing.

x x _ _

But wait, since the 2 character value "1a" is referring to the first "x" followed by the next letter, and since we have determined that the next letter was "x", then "1a" must be referring to "xx".

| Actual string | 2 character value |

|---|

| ... | ... |

| 0x | x |

| 0y | y |

| 0z | z |

| 1a | xx |

In general, every time we encounter this situation, where 2 character value is not in the dictionary and is the next value to be added to the dictionary, the string being referred to is the previous replaced string followed by the same string's first letter. In this case, the previous replaced string was "x" whose first letter is "x", so the string referred to by "1a" is "xx".

x1a0x0y1d0y1b1f1g

xxx0x0y1d0y1b1f1g

After every replacement after the very first (this is the second), we need to update the dictionary with a new 2 character value. The update needs to reflect what was added during compression when the previous replacement (the first in this case) took place. This is because you need to know what letter follows the replacement in order to know which string was added to the dictionary.

Remember that during compression we were adding to the dictionary the shortest string that was not in the dictionary, which consisted of the longest string found in the dictionary (the replaced string) followed by the next letter. After the very first replacement we made, the "x", the next letter in the string is the first letter of the second replacement we made, the "xx". So we add to the dictionary the previous replacement followed by the first letter of the current replacement.

| Actual string | 2 character value |

|---|

| ... | ... |

| 0x | x |

| 0y | y |

| 0z | z |

| 1a | xx |

Finally we've covered all the steps needed to get repeatin'. Let's continue.

xxx0x0y1d0y1b1f1g

This one is easy as it is already in the dictionary.

xxxx0y1d0y1b1f1g

The previous replacement was "xx", the current replacement was "x". So to the dictionary we add "xxx".

| Actual string | 2 character value |

|---|

| ... | ... |

| 0x | x |

| 0y | y |

| 0z | z |

| 1a | xx |

| 1b | xxx |

Repeat.

xxxx0y1d0y1b1f1g

In the dictionary.

xxxxy1d0y1b1f1g

The previous replacement was "x", the current replacement was "y". So to the dictionary we add "xy".

| Actual string | 2 character value |

|---|

| ... | ... |

| 0x | x |

| 0y | y |

| 0z | z |

| 1a | xx |

| 1b | xxx |

| 1c | xy |

Repeat.

xxxxy1d0y1b1f1g

Next value is the next 2 character value to be added to the dictionary. Add previous replacement, "y", followed by its own first letter. So add "yy".

xxxxyyy0y1b1f1g

The previous replacement was "y", the current replacement was "yy". So to the dictionary we add "yy".

| Actual string | 2 character value |

|---|

| ... | ... |

| 0x | x |

| 0y | y |

| 0z | z |

| 1a | xx |

| 1b | xxx |

| 1c | xy |

| 1d | yy |

Repeat.

xxxxyyy0y1b1f1g

In the dictionary.

xxxxyyyy1b1f1g

The previous replacement was "yy", the current replacement was "y". So to the dictionary we add "yyy".

| Actual string | 2 character value |

|---|

| ... | ... |

| 0x | x |

| 0y | y |

| 0z | z |

| 1a | xx |

| 1b | xxx |

| 1c | xy |

| 1d | yy |

| 1e | yyy |

Repeat.

xxxxyyyy1b1f1g

In the dictionary.

xxxxyyyyxxx1f1g

The previous replacement was "y", the current replacement was "xxx". So to the dictionary we add "yx".

| Actual string | 2 character value |

|---|

| ... | ... |

| 0x | x |

| 0y | y |

| 0z | z |

| 1a | xx |

| 1b | xxx |

| 1c | xy |

| 1d | yyy |

| 1e | yx |

Repeat.

xxxxyyyyxxx1f1g

Next value is the next 2 character value to be added to the dictionary. Add previous replacement, "xxx", followed by its own first letter. So add "xxxx".

xxxxyyyyxxxxxxx1g

The previous replacement was "xxx", the current replacement was "xxxx". So to the dictionary we add "xxxx".

| Actual string | 2 character value |

|---|

| ... | ... |

| 0x | x |

| 0y | y |

| 0z | z |

| 1a | xx |

| 1b | xxx |

| 1c | xy |

| 1d | yyy |

| 1e | yx |

| 1f | xxxx |

Repeat.

xxxxyyyyxxxxxxx1g

Next value is the next 2 character value to be added to the dictionary. Add previous replacement, "xxxx", followed by its own first letter. So add "xxxxx".

xxxxyyyyxxxxxxxxxxxx

Complete.

xxxxyyyyxxxxxxxxxxxx

In practice

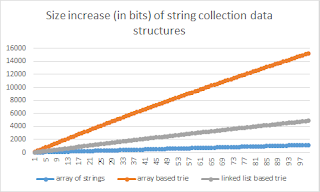

In practice, we do not use 2 character values as that would have a large amount of overhead which reduces the amount of compression possible. Instead we work at the bit level and use 12 bit values, a little over 1 byte. The more bits are used, the bigger the dictionary can be and the longer the strings that are added will be, but this needs to be compromised with the overhead.

You can find code for different programming languages

here.